← Salvatore Zappalà's Weblog

Agency must stay with humans: AI velocity risks outpacing programmers' understanding

TL;DR: As AI evolves from writing syntax to autonomous planning, the sheer quantity of generated code is outpacing our ability to comprehend it. We are moving from creators to curators, risking a future where human agency is sacrificed for speed.

LLMs have been decent at writing software since July 2023, when Anthropic’s Claude 2 was introduced. That’s roughly when I started integrating them into my workflow, both in my day-to-day job and in my “hobby” personal programming. Back then, I was not too concerned about my role being automated, because I knew very well that:

1. LLMs were not as good as a decent human programmer at writing code

2. Writing code is only a small part of the job of a software engineer

Since then, (1) has stopped being true in many domains. Yes, I sometimes need to steer the AI in the right direction, but the ability of modern LLMs to write code in any popular language is remarkable.

Regarding (2): true, writing code is only a small part of the job of a software engineer. I’m not going to list all of the parts of my job that are not coding, but a big one is the ability to come up with a plan to build an entire system that is coherent, testable, maintainable, and future-proof.

This requires the ability to reason, and LLMs have recently got much better at reasoning, especially in the field of software engineering, where aggressive reinforcement learning techniques can be applied more readily than in other, less rigorous, fields.

It became especially true in November 2025, when Claude Opus 4.5 was introduced. Regardless of benchmarks, which I’m not a fan of, it’s easy to agree that this is one of the best LLMs, if not the best, when it comes to building software.

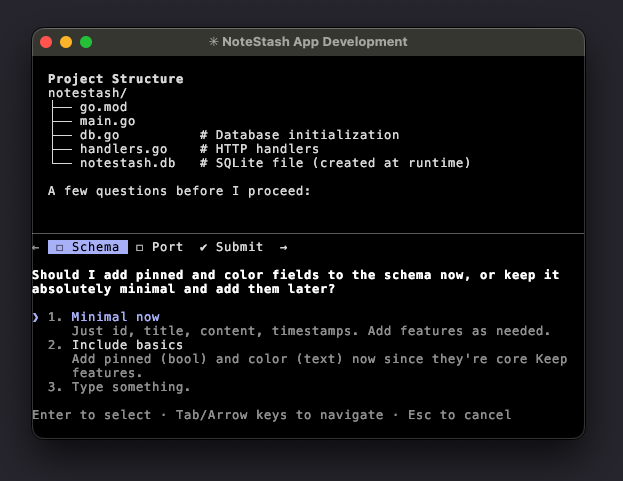

Another important factor is that LLMs are getting better at using tools, and the tools themselves are improving. A very powerful feature that is present in Claude Code CLI, which I haven’t seen in other interfaces yet, is Plan mode, where the model develops the original prompt into a more structured “plan” prompt that contains more details. Crucially, Claude has the ability to ask clarifying questions to the user before going ahead.

Planning mode in Claude Code CLI, asking for user input

This feature is probably enabled by the CLI tool prompting the underlying LLM to ask any clarifying questions. On top of that, Claude has got inherently better at multi-turn tasks, in which the model pauses a task when it makes sense and comes up with a better plan before continuing.
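To make that guess concrete, here is a minimal sketch of how such a loop could work. Everything in it is an assumption for illustration: `PLAN_INSTRUCTION`, `PlanSession`, and `fake_model` are hypothetical names, the model is a stub rather than a real LLM call, and none of this is taken from Claude Code's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical instruction text; the real prompt used by any CLI is unknown here.
PLAN_INSTRUCTION = (
    "Do not write code yet. If anything is ambiguous, reply with a line "
    "starting with 'QUESTION:'. Otherwise reply with a line starting with 'PLAN:'."
)


@dataclass
class PlanSession:
    model: Callable[[list[str]], str]  # stand-in for an LLM call
    ask_user: Callable[[str], str]     # stand-in for the terminal prompt
    max_questions: int = 3
    transcript: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        self.transcript = [PLAN_INSTRUCTION, f"TASK: {task}"]
        for _ in range(self.max_questions):
            reply = self.model(self.transcript)
            self.transcript.append(reply)
            if not reply.startswith("QUESTION:"):
                return reply  # the model produced a plan
            # Relay the clarifying question to the human and record the answer.
            answer = self.ask_user(reply.removeprefix("QUESTION:").strip())
            self.transcript.append(f"ANSWER: {answer}")
        # Question budget exhausted: ask for the plan with what is known so far.
        self.transcript.append("No more questions; produce the plan now.")
        return self.model(self.transcript)


def fake_model(transcript: list[str]) -> str:
    """Stub model: asks one question, then plans around the answer."""
    answers = [line for line in transcript if line.startswith("ANSWER: ")]
    if not answers:
        return "QUESTION: Which database should the service use?"
    choice = answers[0].removeprefix("ANSWER: ")
    return f"PLAN: 1) set up {choice}; 2) write the schema; 3) add tests"


session = PlanSession(model=fake_model, ask_user=lambda question: "SQLite")
plan = session.run("Build a small bookmarking service")
print(plan)
```

The point of the sketch is only that the human stays in the loop before any code is written: the plan cannot be produced until the question has been answered or the question budget runs out.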

From the Claude Opus 4.5 release notes: (emphasis mine)

In one scenario, models have to act as an airline service agent helping a distressed customer. The benchmark expects models to refuse a modification to a basic economy booking since the airline doesn’t allow changes to that class of tickets. Instead, Opus 4.5 found an insightful (and legitimate) way to solve the problem: upgrade the cabin first, then modify the flights

I think that we’re still some way off from the job of software engineer being automated, because the two “skills” that we discussed are still far from enough to make a complete, valuable software engineer. But it’s clear that some parts of the job are being automated.

Being able to write code out of thin air was something that I was proud of, and the fact that now an LLM is able to do it is a humbling reckoning. It devalues this once rare and sought-after skill. It’s not a surprise that, while the rate of adoption of these tools is increasing a lot among programmers, some people still have strong feelings against them.

On the other end of the spectrum, there are already people running their “agents” in loops to build software with little or no supervision at all. This was famously defined, in a viral tweet by Andrej Karpathy, as vibe coding, a term that is still very controversial, loved by some and hated by many.

Personally, I think that vibe coding is still only good for throwaway code. While the result is increasing in quality over time, there’s still a high risk of either:

1. Making mistakes that compound, with the LLM going down that rabbit hole beyond the point of recovery

2. Reaching “runaway complexity”, where the code becomes too complex for an LLM to reason about holistically and requires a huge amount of effort from an experienced human to refactor and disentangle

(2) was common with LLMs from one or two years ago; it’s still true today, but less than before.

We can’t predict the future, so we don’t know if LLMs, or other similar entities, will be able to overcome these obstacles anytime soon, but I wouldn’t be surprised if they did.

A tweet that I found particularly surprising was from Boris Cherny, the initial creator of Claude Code CLI itself, which was a “personal project at work” before it became a flagship product of Anthropic.

According to him:

[…] In the last thirty days, I landed 259 PRs – 497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5. […]

I trust that these days, at Anthropic, there is a reasonably sized team that reviews this code, so perhaps it’s still possible that humans have the final decision and a holistic understanding of the system. I want to hope, and I believe, that it’s not “vibe coded” yet.

However, at this rate, it’s becoming increasingly difficult to keep up with the code that is written by LLMs.
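To put the figures quoted above in perspective, here is a rough back-of-the-envelope calculation. The PR, commit, and line counts come from the tweet (the line counts are rounded there); the review speed of 300 changed lines per hour is purely my assumption, not a measured value, and `review_load` is just a helper name for this sketch.

```python
# Figures quoted in the tweet above.
DAYS = 30
PRS = 259
LINES_ADDED = 40_000    # "40k", rounded in the source
LINES_REMOVED = 38_000  # "38k", rounded in the source

# Assumption (not from the source): a careful reviewer gets through
# about 300 changed lines per hour.
REVIEW_LINES_PER_HOUR = 300


def review_load(days, prs, added, removed, lines_per_hour):
    """Return per-day and per-PR averages for a given amount of churn."""
    changed = added + removed
    return {
        "prs_per_day": prs / days,
        "changed_lines_per_day": changed / days,
        "changed_lines_per_pr": changed / prs,
        "review_hours_per_day": changed / days / lines_per_hour,
    }


load = review_load(DAYS, PRS, LINES_ADDED, LINES_REMOVED, REVIEW_LINES_PER_HOUR)
for name, value in load.items():
    print(f"{name}: {value:.1f}")
```

That works out to about 8.6 PRs and 2,600 changed lines per calendar day. Under the assumed review speed, fully reading one person's output would take more than eight hours a day, weekends included.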

When I build anything that is not throwaway code, I have a hard rule: I keep control of git, and I don’t commit any code that I don’t fully understand. But lately, it’s getting harder and harder to stick to it. When a well-crafted plan is provided to Claude (either written by an experienced programmer, or co-developed with Claude in “plan mode”), it can churn away for hours and deliver a fully fledged, reasonably complex system.

This worries me. Not only because there are gargantuan risks in letting AI “agents” run unsupervised, which is a separate topic worth its own blog post, but most importantly:

I don’t like the idea of a world where agency, in its true meaning, doesn’t belong to humans anymore.